HopeRun Software announced that it has become a strategic software partner of the open source Project ACRN, which will be a key component of its virtualization strategy. This is another major development in HopeRun Software’s IoT engagement. The company has added support for 74 chip-level capabilities on the HiHope platform, which it expects to have a significant impact on embedded virtualization fields such as in-vehicle systems, power management, and retail.

ACRN: Embedded Virtualization Open Source Platform for IoT

Embedded IoT virtualization faces three major challenges.

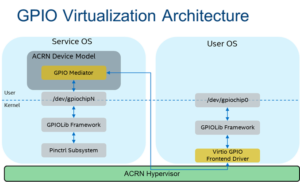

The first challenge is the need to support multiple operating systems. The embedded ecosystem has a wider variety of operating systems and software solutions than the cloud environment.

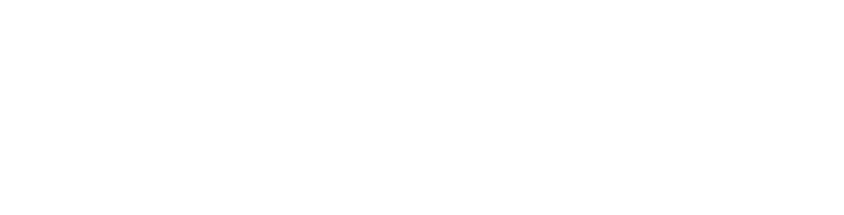

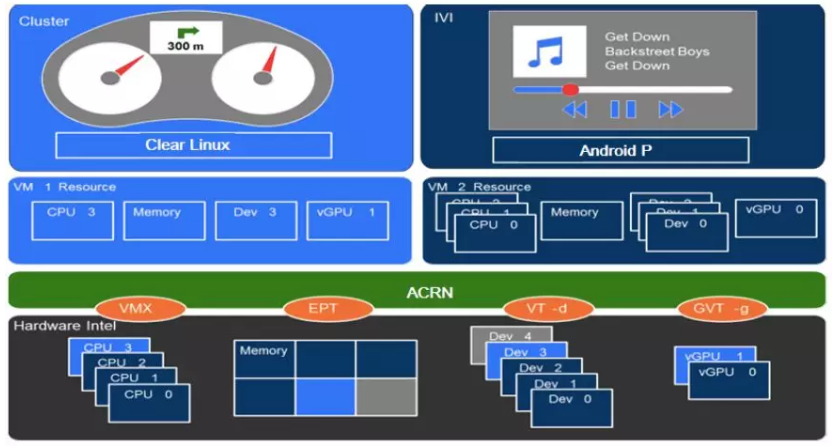

The second challenge is supporting the sharing of additional hardware resources. Cloud virtualization shares the traditional CPU, memory, storage, and network resources. In the in-car scenario, an example from the IoT field, the system must concurrently support sharing of camera image processing, audio peripherals, and security hardware. These resources are not typically relevant in the cloud.

The third challenge is integrating security and non-security domains that run simultaneously. Both are very important in smart driving and other IoT application scenarios, and integrating them in the same hardware environment is a significant technical challenge.

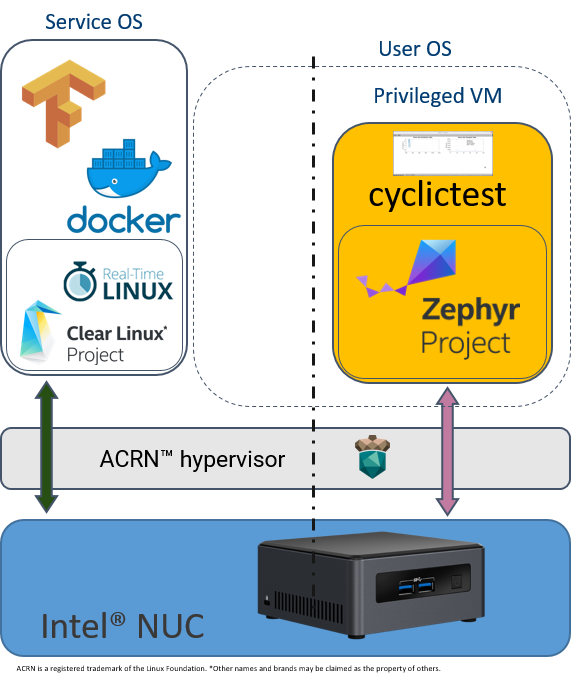

The ACRN hypervisor project was launched in March 2018 as part of the solution to these challenges. ACRN is lightweight, real-time, adaptable, open source, and security-focused, among other qualities. It has become a leading open source hypervisor in the IoT field. It intentionally has a small footprint, under 25K lines of source code. It is a hypervisor designed specifically for embedded IoT.

ACRN has a rich virtualization feature set as well as extensive I/O sharing capabilities. It supports running each operating system as a virtual machine (VM) on the same platform for better security isolation. Hypervisor technology provides a significant advantage by enabling workloads of different types to be consolidated onto a single platform. This reduces development and deployment costs and allows for a leaner overall system architecture. It also helps customers save on hardware costs while providing strong technical support for the security and efficiency of the entire system.

Three advantages of HopeRun Software align with ACRN’s virtualization strategy

ACRN is an open source virtualization solution which provides the foundation for companies like HopeRun to create a productized solution. HopeRun Software has three key advantages in productizing ACRN.

First, ACRN focuses on four major embedded virtualization areas: retail, industrial, energy, and automotive. The IoT strategy announced by HopeRun Software aligns with these areas through its focus on smart retail, smart power, smart driving, and related fields. Through years of software engineering practice, HopeRun Software has established deep, long-term cooperative relationships with many leading customers in the retail and power industries, such as Yum! China and State Grid Power. HopeRun Software believes that many other major industry players will also deploy complete ACRN-based virtualization solutions.

Second, HopeRun Software has established a technical team of nearly 100 people, with strong technology development and virtualization solutions capabilities. HopeRun Software has been following the development of ACRN technology closely since its inception.

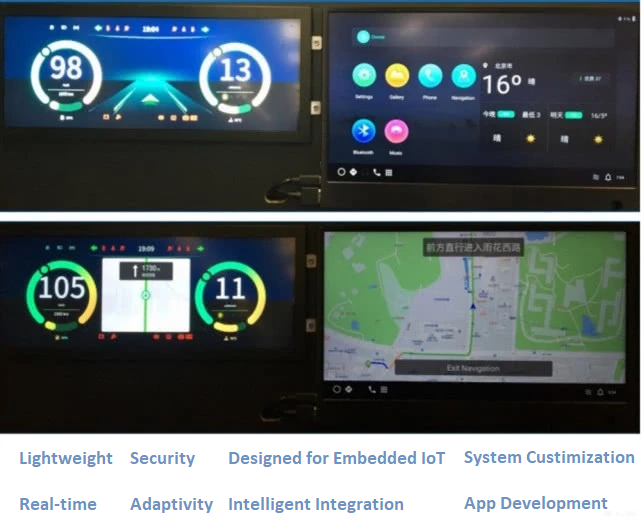

Third, HopeRun has rapidly developed its own unique integration solution capabilities, such as development, testing, and customization of car dashboard clusters, and development and testing of in-vehicle infotainment (IVI) systems, among many others. At the beginning of 2019, HopeRun Software pioneered the prototype of the ACRN-based smart cockpit in China: the software-defined cockpit (SDC).

ACRN and the HopeRun and HiHope Solution

ACRN is a neutrally governed open source project supporting x86, ARM, and a diverse set of other architectures. In early 2018, HopeRun Software officially launched HiHope, a new-generation open source artificial intelligence computing platform. It fully supports the ARM architecture. In March 2019, HopeRun Software published a list of 74 chip-level capabilities based on the ARM architecture, covering a comprehensive capability chain that includes chip-level design, chip-level hardware, chip-level software, and chip-level solutions. HopeRun Software is actively planning to port the HiHope platform to the x86 architecture.

The HopeRun Software HiHope platform’s “Chip + Algorithm + Application” approach provides an end-to-end solution. Its technical and deployment system, together with a one-stop professional delivery model covering pre-research, design, development, and testing, leaves ample room for deepening strategic cooperation and rapidly expanding customers and markets in embedded virtualization fields such as retail, energy, and automotive.